Monty Python is awesome. But there is one thing the Brits gave to the world that is even better: the ZX Spectrum.

Introduced in 1982, the ZX Spectrum was a computer that sparked the home computer revolution in Britain and, due to its affordability, in many other European countries. The basic version had 16 KB of RAM, while the enhanced version boasted an immense 48 KB.

Though I’ve never owned a ZX Spectrum, it is close to my heart. It was the first computer I had a chance to work with. The computer club I attended as a kid had a dozen of these computers, and we couldn’t wait for the teacher to finally stop talking and let us play some games.

Fast forward 40 years: while I was browsing eBay, a brand-new replacement case for a ZX Spectrum showed up in my search results. The memories came back, and I impulse-bought it immediately. I didn’t have any plan for it, but once I got it, I knew I had to put it to good use, namely, to build a ZX Spectrum replica.

Getting started

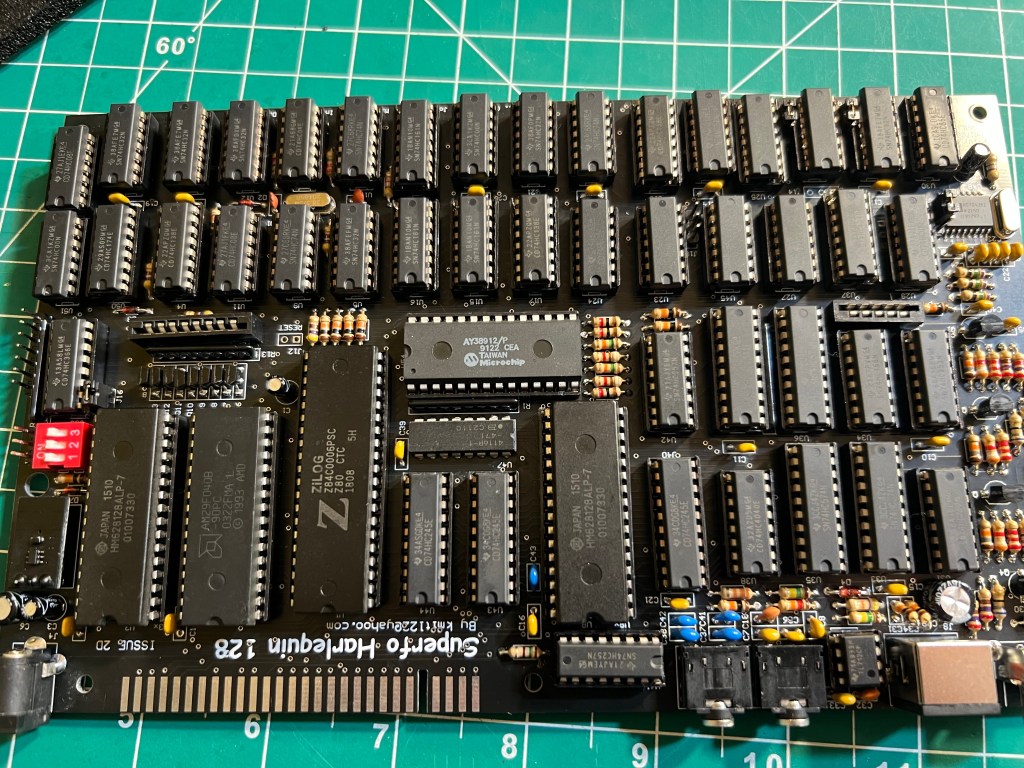

A nice case is a good start, but what goes inside is key. I found a few interesting options, but the Harlequin 128K Kit from ByteDelight was by far the best. This kit contained everything I needed to build a fully functional ZX Spectrum.

When I received my Harlequin 128K Kit, I felt overwhelmed. While the original ZX Spectrum had only a handful of chips (not counting RAM), this kit had more than 50. There is a good reason for this: the kit uses widely available, off-the-shelf chips to replicate the ZX Spectrum’s ULA (Uncommitted Logic Array), a custom chip that went out of production almost 40 years ago.

I had never done much soldering, and the 50+ chips alone meant hundreds of solder joints. On top of that, there were resistors, diodes, transistors, and more. The website promised it would be a fun challenge and that basic skills and creativity were enough to pull it off. These were encouraging words, but what the website didn’t mention was that a single mistake could ruin it all.

To make assembly easier, the kit’s parts were divided into 40 or so clearly labeled bags, with a recommended installation order. The detailed assembly guide included additional hints and called out easy-to-miss gotchas. Given my skill level, I found the kit’s organization extremely helpful.

First try

The instructions estimated that the soldering would take a few hours. In my case, it took a few hours a day for a week. I followed the assembly steps very closely because I knew troubleshooting any non-obvious issues would be beyond my abilities. Eventually, I emerged from my garage with the board, anxious to try it.

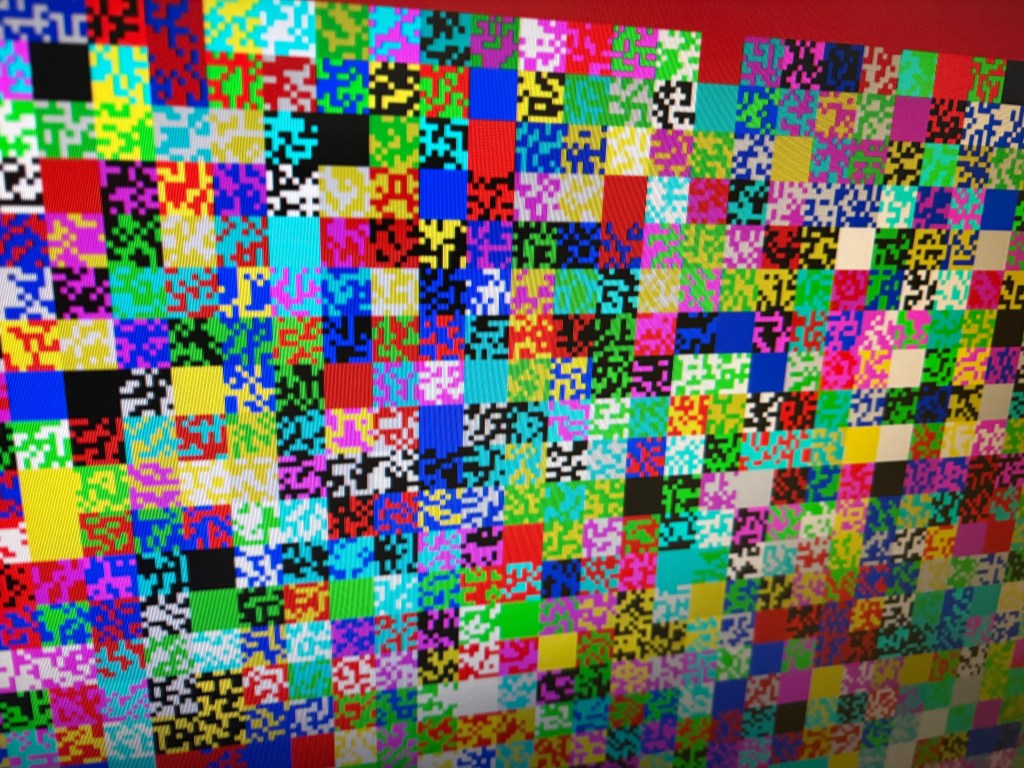

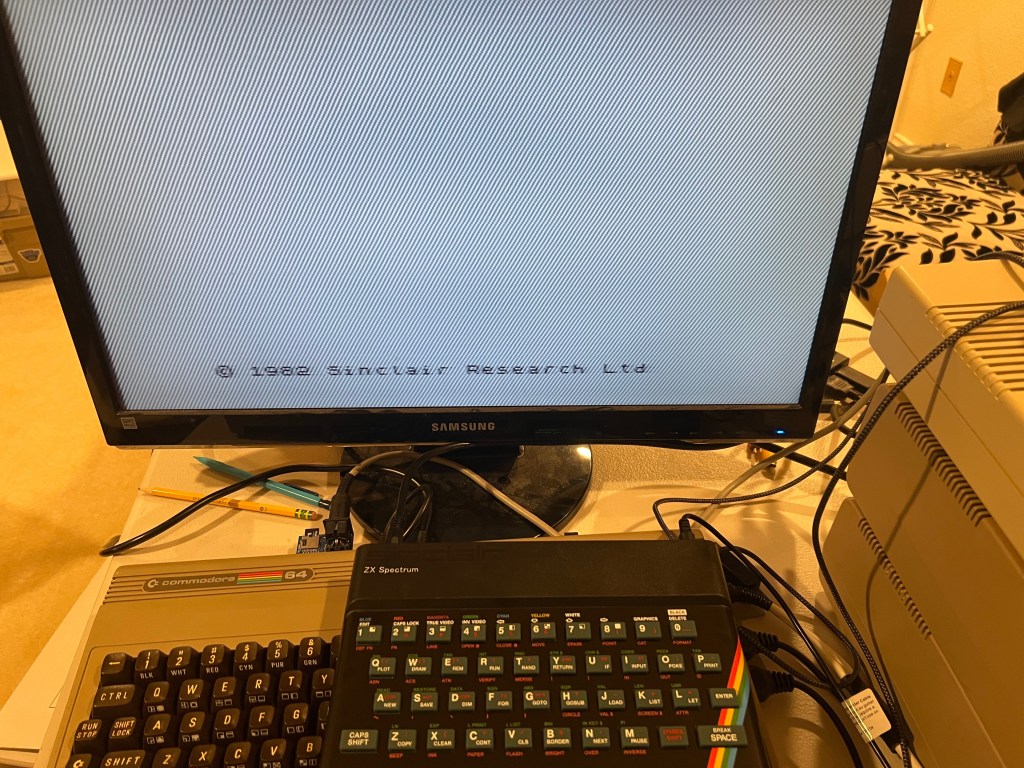

I connected it, and what I saw made my heart sink.

If the board had been completely dead, I might have hoped I had missed a connection, but what I saw indicated a subtle issue I doubted I could fix. A quick Internet search only confirmed my suspicion. A few people reported similar symptoms, but no one could propose a reliable fix.

I verified that all the chips were inserted correctly and pressed them down firmly to ensure good contact. I inspected my soldering but didn’t find any missed solder joints. I double-checked the jumpers and found that a couple of them controlling the video settings were misconfigured. I corrected them, but nothing changed. Frustrated, I gave up.

Round 2

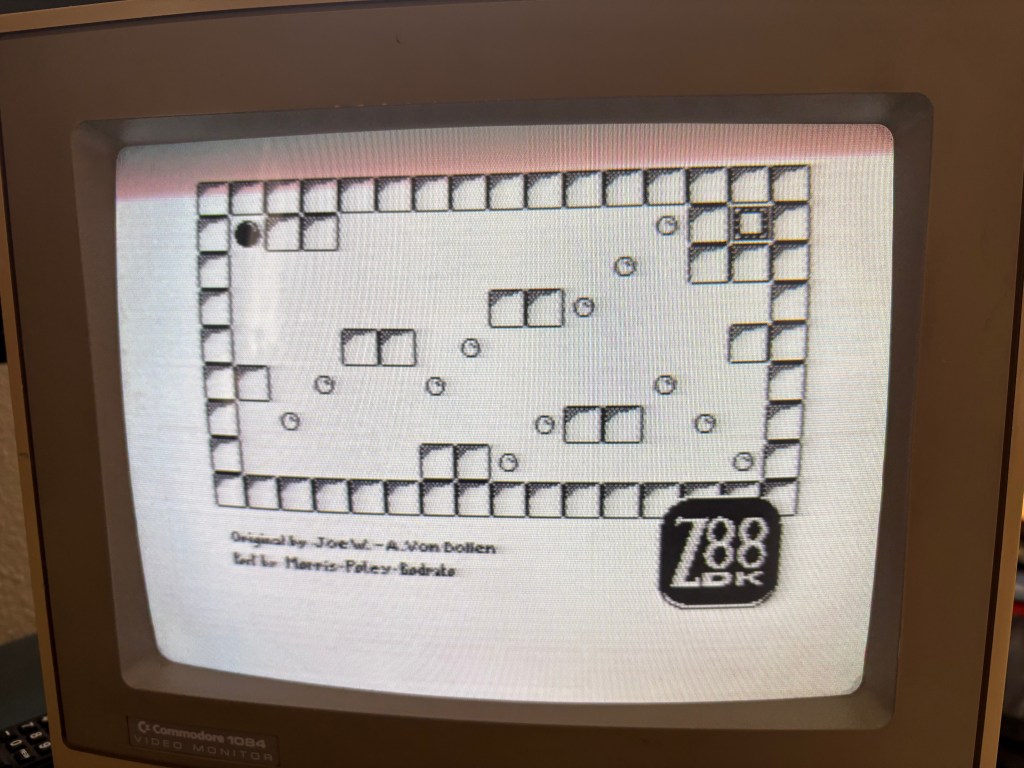

The problem haunted me. A week later, I went over everything again and confirmed I hadn’t missed anything. As I was out of ideas, I started playing with jumpers for ROM selection. To my astonishment, one of my changes produced this screen:

This meant the board had been working fine all along! It was just trying to run some garbage instead of a valid ROM program.

ROM

I turned to the guide to understand what was happening. For copyright reasons, the kit doesn’t ship with the original ZX Spectrum ROM. Instead, it comes with an AM29F040B flash EEPROM (512 KB), which is big enough to hold up to 8 different ROM images. The board uses jumpers to select which image to run. Apparently, some of the banks in my EEPROM didn’t contain a valid ROM image, and my initial jumper configuration happened to point to one of them.
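To make the bank idea concrete, here is a minimal sketch of how a 512 KB chip can be split into eight equal banks. It assumes, as a simplification, that the selection jumpers drive the chip’s top three address lines (A16 to A18), which gives 64 KB per bank; the real jumper-to-bank mapping is defined in the Harlequin assembly guide, and the function names are hypothetical.

```python
# Sketch: splitting a 512 KB AM29F040B into 8 equal ROM banks.
# ASSUMPTION: the bank-select jumpers drive the top three address lines
# (A16-A18). Check the Harlequin guide for the board's real mapping.

CHIP_SIZE = 512 * 1024              # AM29F040B: 4 Mbit = 512 KB
NUM_BANKS = 8
BANK_SIZE = CHIP_SIZE // NUM_BANKS  # 64 KB per bank under this assumption


def bank_from_jumpers(a16: bool, a17: bool, a18: bool) -> int:
    """Combine three (hypothetical) jumper settings into a bank number 0-7."""
    return (int(a18) << 2) | (int(a17) << 1) | int(a16)


def chip_address(bank: int, offset: int) -> int:
    """Translate an offset inside a bank into a physical chip address."""
    if not 0 <= bank < NUM_BANKS:
        raise ValueError(f"bank must be 0-{NUM_BANKS - 1}")
    if not 0 <= offset < BANK_SIZE:
        raise ValueError("offset outside the bank window")
    return bank * BANK_SIZE + offset


if __name__ == "__main__":
    # Print the address range each bank occupies inside the chip.
    for bank in range(NUM_BANKS):
        start = chip_address(bank, 0)
        print(f"bank {bank}: 0x{start:05X}-0x{start + BANK_SIZE - 1:05X}")
```

Under this model, a jumper setting that points at a bank nobody programmed makes the CPU fetch whatever happens to be stored there, which matches the “garbage instead of a valid ROM” symptom.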

Now that I understood the issue, the fix was easy: I needed to burn the ZX Spectrum ROM into my EEPROM. The only problem was that I didn’t have an EEPROM programmer. After some research, I settled on the XGecu T48 EEPROM programmer. When I got it, I was unpleasantly surprised: the official software only supported Windows, but I am a Mac user.

I sacrificed a lot for this project, but having to install Parallels would have been too much. Fortunately, I found an open-source command-line tool called minipro that offered what I needed.

The Harlequin 128K board I built was compatible with both the original 48K ZX Spectrum and the ZX Spectrum 128. Because my EEPROM could hold multiple ROM images, I burned both so I could easily switch between them.
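Burning two ROMs into one chip means first assembling a single image file with each ROM at the start of its bank. The sketch below does that, padding unused space with 0xFF (the value erased flash reads back as). It assumes 64 KB banks (512 KB / 8); the bank numbers, file names, and the minipro device string are placeholders, since the guide defines where each ROM must go and the exact device name should be confirmed with `minipro -l`.

```python
# Sketch: combine several ROM dumps into one image for a 512 KB flash chip.
# ASSUMPTIONS: 8 banks of 64 KB each; bank numbers, file names, and the
# minipro device string are placeholders, not values from the Harlequin guide.
from pathlib import Path

CHIP_SIZE = 512 * 1024        # AM29F040B capacity
BANK_SIZE = CHIP_SIZE // 8    # assumed 64 KB per bank
ERASED = 0xFF                 # erased NOR flash reads back as 0xFF


def build_image(roms: dict[int, bytes]) -> bytes:
    """Place each ROM at the start of its bank and pad the rest with 0xFF."""
    image = bytearray([ERASED]) * CHIP_SIZE
    for bank, data in roms.items():
        if not 0 <= bank < 8:
            raise ValueError(f"invalid bank {bank}")
        if len(data) > BANK_SIZE:
            raise ValueError(f"ROM for bank {bank} exceeds {BANK_SIZE} bytes")
        start = bank * BANK_SIZE
        image[start:start + len(data)] = data
    return bytes(image)


def write_image(rom_files: dict[int, str], out_path: str) -> None:
    """Read ROM dumps from disk and save the combined image to out_path."""
    roms = {bank: Path(name).read_bytes() for bank, name in rom_files.items()}
    Path(out_path).write_bytes(build_image(roms))


def minipro_write_args(device: str, image_path: str) -> list[str]:
    """Build a minipro command line (-p selects the chip, -w writes a file)."""
    return ["minipro", "-p", device, "-w", image_path]
```

For example, `write_image({0: "48.rom", 1: "128.rom"}, "harlequin.bin")` would produce the image, and `minipro_write_args("AM29F040B@DIP32", "harlequin.bin")` could then be passed to `subprocess.run`. Keeping the image builder separate from the programmer call makes it easy to inspect the file before committing it to the chip.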

Visual Minipro

While the minipro tool did the job, setting it up and understanding the options took some effort. A graphical version of the tool would make everything so much easier. Since nothing like it existed, I decided to build it myself. Because my tool is a GUI wrapper for minipro, I called it Visual Minipro and made it available on the Mac App Store.

Finishing the build

I was delighted to see my board up and running, but two problems bothered me: the video quality was pretty bad, and I couldn’t use a joystick.

Video

The Harlequin 128K board offers two video outputs: composite and RGB. The RGB signal is intended for use with SCART, which has never been a thing in the US. Since none of my monitors supported SCART, composite video felt like a better choice. Besides, exposing the RGB socket would require cutting a hole in my case, which I didn’t want to do.

To get NTSC timings over composite video, I installed the NTSC crystal that came with the kit. It did the trick, but the result wasn’t great. The picture on my 1084S monitor was blurry, and when I tried to convert it to HDMI using my Retrotink 2X Pro converter, I got no color.

Joystick

In the 8-bit era, a joystick was a must-have device, and many games were hard to enjoy without one. While the ZX Spectrum didn’t support a joystick out of the box, third-party joystick interfaces quickly filled the gap. The most popular one was the Kempston joystick interface.
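Part of the Kempston interface’s appeal is how simple it is: software reads a single byte from I/O port 31 (0x1F; `IN 31` in Sinclair BASIC), and the low five bits report the joystick state, with a 1 meaning pressed. The sketch below decodes such a byte; the function and dictionary names are illustrative.

```python
# Sketch: decode the byte a Kempston interface returns on I/O port 31 (0x1F).
# Bits are active high: a 1 means that direction or button is pressed.
KEMPSTON_PORT = 0x1F

KEMPSTON_BITS = {
    "right": 0,
    "left": 1,
    "down": 2,
    "up": 3,
    "fire": 4,
}


def decode_kempston(value: int) -> set[str]:
    """Return the set of inputs that are active in a Kempston port reading."""
    return {name for name, bit in KEMPSTON_BITS.items() if value & (1 << bit)}


print(sorted(decode_kempston(0b10001)))  # ['fire', 'right']
```

Because the whole protocol is one port and five bits, any device that answers port 31 this way (a built-in board circuit or an add-on interface) looks identical to games.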

The Harlequin 128K board has built-in support for the Kempston joystick interface. This is nice, but there are two problems: the ZX Spectrum case lacks a factory-made hole for a joystick port, and the board lacks mounting points for a DB9 joystick socket. So, even if I had modified the case, it might not have been strong enough to hold the joystick port securely. Besides, I knew a DIY joystick hole would look ugly, so I decided to look for a different solution.

ZX VGA Joy to the rescue

One popular way to get HDMI output from a ZX Spectrum is the ZX-HD interface. It is a fine device, but it wouldn’t solve my joystick problem. So, I kept looking for alternatives and found something that fit my needs perfectly: ZX VGA Joy. This device could output digital video over HDMI and provided a Kempston-compatible joystick port. As a bonus, it also had a reset button.

I ordered it and couldn’t wait to try it. When it finally arrived, I couldn’t get it to work reliably with my setup: the screen would go black soon after I turned the Spectrum on. Quick debugging showed that the computer itself was working, since the composite video was just fine. After reading more about the inner workings of ZX VGA Joy, I got a hunch about what was happening. ZX VGA Joy relies on PAL timings to generate its HDMI output, but my computer had the NTSC crystal installed. My hypothesis was that this mismatch caused the glitch, because PAL and NTSC use different line and field rates (PAL refreshes at 50 Hz, NTSC at roughly 60 Hz).
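To see why the crystal and the video standard are so tightly linked, the sketch below derives the video timing of a standard 48K Spectrum from its CPU clock, using the commonly documented figures of a 3.5 MHz Z80, 224 T-states per scanline, and 312 lines per frame. The line rate lands exactly on PAL’s 15,625 Hz. This is only an illustration of the standard 48K timing; it does not model the Harlequin’s NTSC mode or ZX VGA Joy’s internals.

```python
# Sketch: derive the 48K Spectrum's video timing from its CPU clock.
# Uses the commonly documented 48K (PAL) figures.
CPU_HZ = 3_500_000          # Z80 clock in the 48K Spectrum
T_STATES_PER_LINE = 224     # CPU cycles per scanline
LINES_PER_FRAME = 312       # non-interlaced frame

line_rate = CPU_HZ / T_STATES_PER_LINE    # Hz
frame_rate = line_rate / LINES_PER_FRAME  # Hz

# NTSC color line rate is defined as 4.5 MHz / 286 (about 15,734 Hz).
NTSC_LINE_RATE = 4_500_000 / 286

print(f"line rate:  {line_rate:.0f} Hz")   # 15625 Hz, the PAL line rate
print(f"frame rate: {frame_rate:.2f} Hz")  # 50.08 Hz
print(f"gap to NTSC line rate: {abs(NTSC_LINE_RATE - line_rate) / line_rate:.1%}")
```

Any change to the master clock shifts both numbers together, so a device built around these PAL figures can lose sync when the board runs on a crystal chosen for NTSC.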

My guess was correct. Replacing the NTSC crystal with a PAL crystal fixed the blank-screen problem, but it came at a cost: I lost the ability to use composite video. This wasn’t a big deal, though. Given the low quality of the composite signal, I wouldn’t have wanted to use it anyway.

Final result

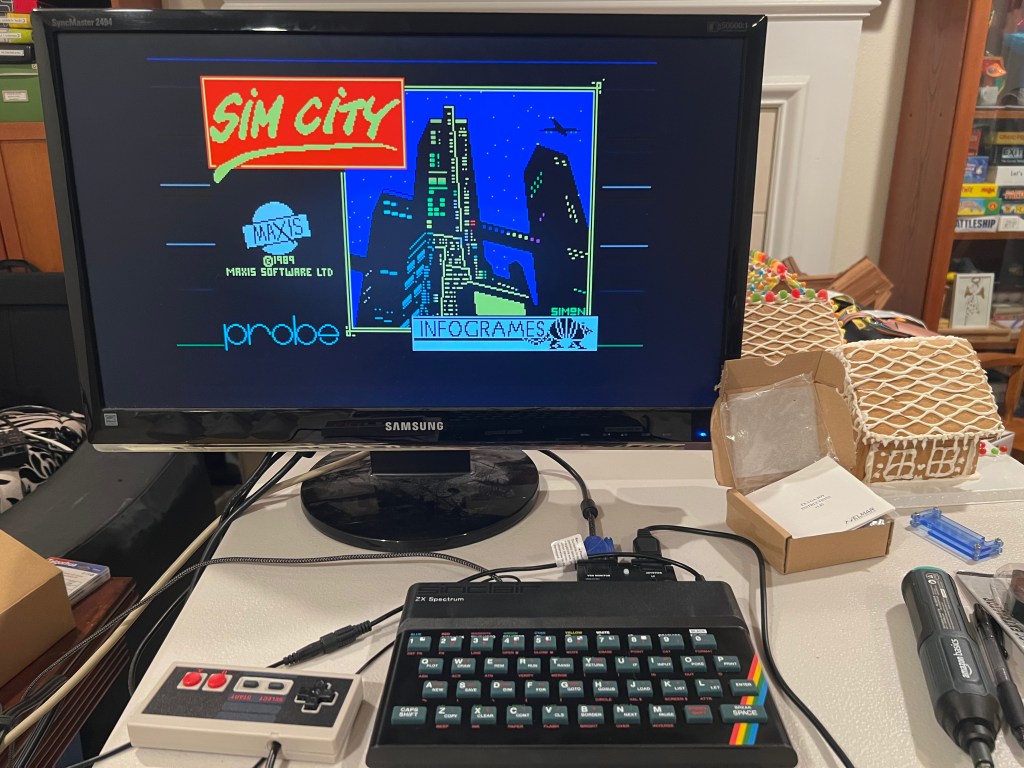

Here is the final result after putting everything together (with a modded Nintendo NES controller in lieu of a joystick and the original SimCity game humming happily on 48 KB of RAM).

Conclusion

There is no practical reason to build a 40-plus-year-old computer today. To be honest, even when I started, I didn’t expect to actually use it. However, the process of building it, the interesting technical challenges, and the satisfaction of seeing it come to life were all well worth it.