If your on-call is perfect and you can’t wait for your next shift, you can stop reading now.

But, in all likelihood, this isn’t the case.

Rather, you feel exhausted by dealing with tasks, incidents, and alerts that often fire after hours, and you constantly check the clock to see how much time is remaining in your shift.

On-call can be overwhelming. You may not know all the systems you are responsible for very well. Often, you will get tasks asking you to do things you have never done before. You might be pulled into investigating outages of infrastructure owned by other teams.

I know this very well – I have been participating in on-call for almost seven years, and I decided to write a few posts to share what I learned. Today, I would like to talk about handling incidents.

Handling Incidents ⚠️

Unexpected system outages are the most stressful part of being on call. A sudden alert, sometimes in the middle of the night, can be a source of distress. Here are the steps I recommend following when dealing with incidents.

1. Acknowledge

Acknowledging alerts is one of the most important on-call responsibilities. It tells people that the on-call is aware of the problem and is working on resolving it. In many companies, alerts will be escalated up the management chain if not acknowledged promptly.
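To make the escalation behavior concrete, here is a minimal sketch of how an unacknowledged alert might climb an escalation chain. The chain, the 15-minute timeout, and the function name `who_gets_paged` are assumptions made up for illustration; real values come from whatever paging policy your company has configured.

```python
from datetime import datetime, timedelta
from typing import Optional

# Hypothetical escalation chain and timeout, for illustration only.
ESCALATION_CHAIN = ["primary on-call", "secondary on-call", "engineering manager"]
ACK_TIMEOUT = timedelta(minutes=15)


def who_gets_paged(
    fired_at: datetime, acked_at: Optional[datetime], now: datetime
) -> Optional[str]:
    """Return who is being paged at `now`, or None once the alert is acknowledged."""
    if acked_at is not None and acked_at <= now:
        return None
    # Every missed acknowledgement window moves the page one level up the chain.
    missed_windows = max(0, int((now - fired_at) / ACK_TIMEOUT))
    level = min(missed_windows, len(ESCALATION_CHAIN) - 1)
    return ESCALATION_CHAIN[level]
```

With these assumed values, an alert that is acknowledged within the first 15 minutes never leaves the primary on-call, which is exactly why a prompt acknowledgement matters.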

2. Triage

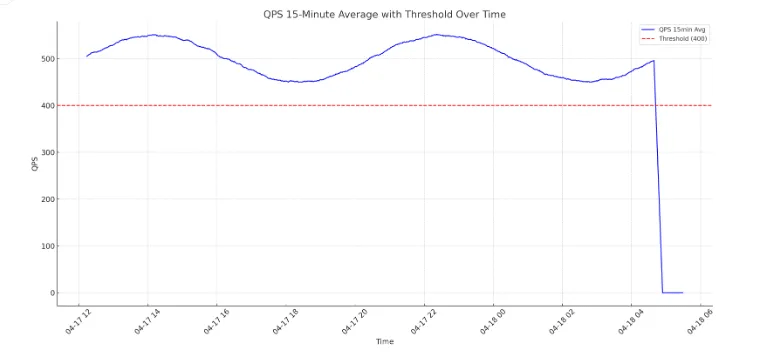

Triaging means assessing the issue’s impact and urgency and assigning it a priority. This is easier when nothing else is going on. If other alerts are already active, it is crucial to work out whether the new alert is related to them. If it is not, the on-call needs to decide which alerts to handle first.
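One way to picture triage is as a small impact and urgency matrix. The sketch below is only an illustration: the three levels, the `P1` to `P4` labels, and the scoring rule are assumptions, not a standard, and your team's own definitions should take precedence.

```python
from enum import IntEnum


class Level(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


def priority(impact: Level, urgency: Level) -> str:
    """Map impact and urgency to a priority label (hypothetical scheme)."""
    score = impact + urgency  # ranges from 2 to 6
    if score >= 6:
        return "P1"
    if score == 5:
        return "P2"
    if score == 4:
        return "P3"
    return "P4"


def order_alerts(alerts: list[dict]) -> list[dict]:
    """Sort unrelated alerts so the highest priority comes first."""
    return sorted(alerts, key=lambda a: priority(a["impact"], a["urgency"]))


alerts = [
    {"name": "disk usage warning", "impact": Level.LOW, "urgency": Level.MEDIUM},
    {"name": "checkout failing", "impact": Level.HIGH, "urgency": Level.HIGH},
]
ordered = order_alerts(alerts)
```

Here `ordered` starts with the checkout alert, because high impact combined with high urgency maps to `P1` under this scheme.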

3. Troubleshoot

Troubleshooting is, in my opinion, the most difficult task when dealing with alerts. It requires checking and correlating dashboards, logs, code, output from diagnostic tools, etc., to understand the problem. All this happens under huge pressure. Runbooks (a.k.a. playbooks) with clear troubleshooting steps and remediations make troubleshooting easier and faster.

4. Mitigate

Quickly mitigating the outage is the top priority. While it may sound counterintuitive, understanding the root cause is not the goal at this point and is often unnecessary for an effective mitigation. Here are some common mitigations:

- Rolling back a deployment – outages caused by deployments can be quickly mitigated by rolling back the deployment to the previous version.

- Reverting configuration changes – problems caused by configuration changes can be fixed by reverting these changes.

- Restarting a service – allowing the service to start from a clean state can fix entire classes of problems. One example could be leaking resources: a service sometimes fails to close a database connection. Over time, it exhausts the connection pool and, as a result, can’t connect to the database.

- Temporarily stopping a service – if a service is misbehaving, e.g., corrupting or losing data due to a failing dependency, temporarily shutting it down could be a good way to stop the bleeding.

- Scaling – problems resulting from surges in traffic can be fixed by scaling the fleet.
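The connection leak mentioned under restarting a service can be reproduced with a toy model. The sketch below uses a made-up `ToyPool` class rather than any real database driver, and the handler's bug is invented for illustration. It shows why a restart helps: a fresh process starts with a fresh pool.

```python
class PoolExhausted(Exception):
    pass


class ToyPool:
    """Toy stand-in for a database connection pool (not a real driver API)."""

    def __init__(self, size: int) -> None:
        self.size = size
        self.in_use = 0

    def acquire(self) -> None:
        if self.in_use >= self.size:
            raise PoolExhausted("no free connections")
        self.in_use += 1

    def release(self) -> None:
        self.in_use -= 1


def leaky_handler(pool: ToyPool, fail: bool) -> str:
    pool.acquire()
    if fail:
        # Bug: the early return on the error path skips release().
        return "error"
    pool.release()
    return "ok"


pool = ToyPool(size=3)
for _ in range(3):
    leaky_handler(pool, fail=True)  # each failed request leaks one connection

try:
    leaky_handler(pool, fail=False)
    exhausted = False
except PoolExhausted:
    exhausted = True  # even healthy requests now fail

# "Restarting the service": a new process gets a new, empty pool.
pool = ToyPool(size=3)
after_restart = leaky_handler(pool, fail=False)
```

The restart only buys time. The leak itself still has to be fixed later, for example by releasing the connection in a `finally` block, which is root-cause work rather than mitigation.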

5. Ask for help

Many on-call rotations cover multiple services, and it may be impossible to be an expert in all of them. Turning to a more knowledgeable team member is often the best thing to do during incidents. Getting help quickly is especially important for urgent issues that have a big negative impact. You should also ask for assistance when you are dealing with multiple simultaneous issues or cannot keep up with incoming tasks.

6. Root cause

The root cause of an outage is often found as a side effect of troubleshooting. When this is not the case, it is essential to identify it once the outage has been mitigated. Without knowing the root cause, you cannot prevent future outages caused by the same issue.

7. Prevention

The final step is to implement mechanisms that prevent similar outages in the future. Often, this requires fixing team culture or a process. For example, if team members regularly merge code despite failing tests, an outage is bound to happen.

I use these steps for each critical alert I get as an on-call, and I find them extremely effective.