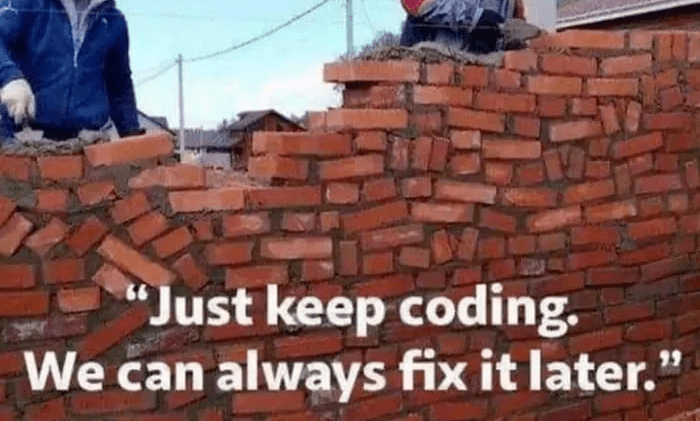

One thing I learned during my career as a software engineer is that leaving unfinished work to complete it “later” never works.

Resuming paused work is simply so hard that it hardly ever happens without external motivation.

Does it matter, though?

If the work was left unfinished not because it was deprioritized but because it was boring, tedious, or difficult, then more often than not, it does matter. The remaining tasks are usually in the “important but not urgent” category.

What happens if this work is never finished?

Sometimes, there are no consequences. Occasionally, things explode. In most cases, the unfinished work becomes a toll the team silently pays every day.

From my observations, the most common software engineering activities left “for later” are these:

- adding tests

- fixing temporary hacks

- writing documentation

Adding tests “later”

Insufficient test coverage slows teams down. Verifying each change manually and thoroughly takes time, so it is often skipped. The result is a high number of bugs that the team has to fix instead of building new features. What’s worse, many of these bugs keep coming back because nothing automatically catches them when they are reintroduced.

I don’t think there is ever a good reason to leave writing tests for “later.” The best developers I know treat test code like they treat product code. They wouldn’t ship a half-baked feature, and they won’t ship code without tests – no exceptions.
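To make the point about recurring bugs concrete, here is a minimal sketch of a regression test. Everything in it is made up for illustration: `parse_price` is a hypothetical helper, and the bug it supposedly once had (failing on prices with a thousands separator) is an assumption, not something from a real project. The idea is only that once a bug is fixed, a small test pins the fix in place.

```python
def parse_price(text: str) -> float:
    """Hypothetical helper: convert a price string such as "1,299.50" to a float."""
    # The fix for the (hypothetical) bug: drop thousands separators before parsing.
    cleaned = text.strip().replace(",", "")
    return float(cleaned)


def test_plain_number():
    assert parse_price("42") == 42.0


def test_thousands_separator():
    # Regression test: in this made-up scenario, this input used to raise ValueError.
    assert parse_price("1,299.50") == 1299.5


def test_surrounding_whitespace():
    assert parse_price("  7.25 ") == 7.25
```

The tests are plain functions with bare `assert` statements, so a runner such as pytest can collect them. If someone later removes the separator handling, `test_thousands_separator` fails right away instead of the bug resurfacing in production.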

Fixing temporary hacks “later”

Software developers introduce temporary hacks to their code for many reasons. The problem is that there is nothing more permanent than temporary solutions. “The show must go on,” so new code gets added on top of the existing hacks. This new code often includes additional hacks required to work around the previous hacks. With time, adding new features or fixing bugs becomes extremely difficult, and removing the “temporary” hack is impossible without a major rewrite.

In an ideal world, software developers would never need to resort to hacks. The reality is more complex than that. Most hacks are added for good reasons, like working around an issue in someone else’s code, fixing an urgent and critical bug, or shipping a product on time. However, the decision to introduce a hack should include a commitment to the proper, long-term solution. Otherwise, the tech debt will grow quickly and impact everyone working with that codebase.
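One possible way to turn that commitment into something the team cannot quietly forget is to record each hack with a removal date and let a test complain once the date has passed. The sketch below is an assumption on my part, not an established practice from any particular team: `TemporaryHack`, `HACKS`, `overdue_hacks`, the ticket ID, and the date are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class TemporaryHack:
    description: str
    ticket: str      # hypothetical tracker ID for the proper, long-term fix
    remove_by: date  # the date the team committed to when adding the hack


# Hypothetical registry of the hacks currently living in the codebase.
HACKS = [
    TemporaryHack(
        description="Retry the vendor call three times to mask intermittent failures",
        ticket="PROJ-123",
        remove_by=date(2030, 1, 31),
    ),
]


def overdue_hacks(hacks, today):
    """Return the hacks whose removal date is strictly before `today`."""
    return [hack for hack in hacks if today > hack.remove_by]


def test_no_overdue_hacks():
    # Fails once any registered hack outlives its agreed removal date.
    overdue = overdue_hacks(HACKS, date.today())
    assert not overdue, f"Overdue hacks: {[hack.ticket for hack in overdue]}"
```

Passing `today` in as a parameter keeps `overdue_hacks` easy to test with fixed dates. Whether a failing build is the right nudge, or a warning is enough, is a judgment call for each team.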

Writing documentation “later”

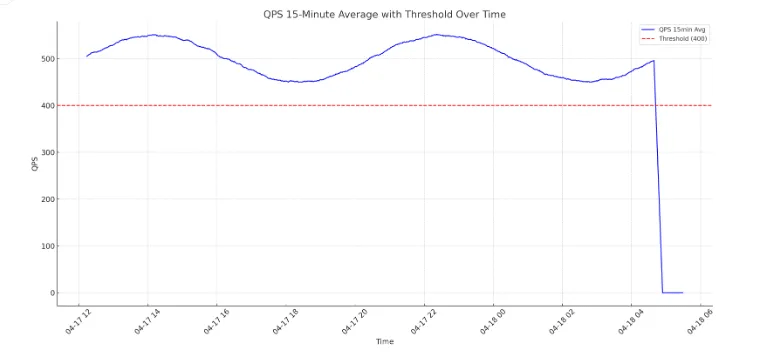

Internal documentation for software projects is almost always an afterthought. Yet it is another area that, if neglected, will cause the team pain. Anyone who has been on call knows how difficult it is to troubleshoot and mitigate an issue quickly without a decent runbook.

Documentation also saves a lot of time when working with other teams or onboarding new team members. It is always faster to send a link to a wiki page describing the architecture of your system than to explain it again and again.

One way to ensure that documentation won’t be forgotten is to include writing documentation as a project milestone. To make it easier for the team, this milestone could be scheduled for after coding has been completed or even after the product has shipped. If the entire team participates, the most important topics can be covered in just a few days.

How does “later” impact YOU?

Leaving unfinished work for “later” impacts you in two significant ways. First, it strains your mental capacity. The brain keeps reminding us about unfinished tasks (the Zeigarnik effect), which can lead to stress and anxiety. Second, being routinely “done, except for” can create an impression of unreliability. This perception may hurt your career, as it could result in fewer opportunities to work on critical projects.